
[Signal Processing] 2. Deep Learning for Audio 1

mhiiii

2024. 3. 28. 23:37


Deep learning (DL) is a subset of machine learning (ML) that uses deep neural networks (DNNs).

When should you use deep learning?

- Very large datasets

- Complex problems

- Intensive computing resources

DL applications in audio

- Speech recognition

- Voice-based emotion classification

- Noise recognition

- ...

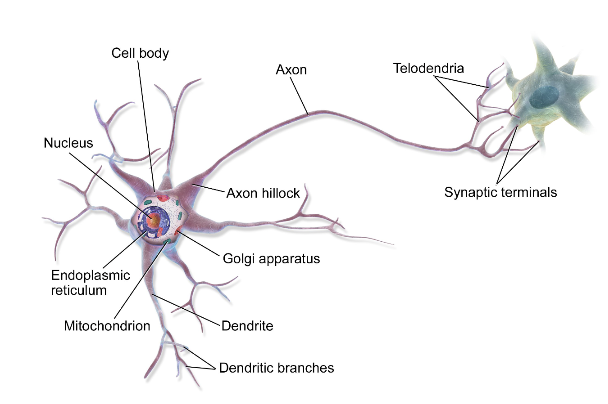

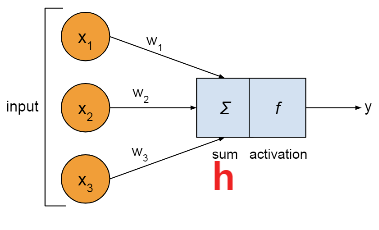

Implementing an artificial neuron with Python

$$h = \sum_{i} x_i w_i = x_1 w_1 + x_2 w_2 + x_3 w_3$$

$$y = f(h) = f(x_1 w_1 + x_2 w_2 + x_3 w_3)$$
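Because the activation $f$ is left generic in $y = f(h)$, a neuron can be sketched with the activation passed in as a parameter. This is a minimal illustration, not the course's code; the names `neuron`, `identity`, and `step` are hypothetical, chosen only to show that $h$ is computed the same way regardless of which $f$ is applied.

```python
from typing import Callable, Sequence


def identity(h: float) -> float:
    # Linear activation: the output is the weighted sum itself
    return h


def step(h: float) -> float:
    # Heaviside step activation: outputs 1.0 when the weighted sum is non-negative
    return 1.0 if h >= 0 else 0.0


def neuron(inputs: Sequence[float], weights: Sequence[float],
           f: Callable[[float], float]) -> float:
    # h = sum_i x_i * w_i
    h = sum(x * w for x, w in zip(inputs, weights))
    # y = f(h)
    return f(h)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    w = [0.5, -1.0, 0.25]
    print(neuron(x, w, identity))  # 0.5 - 2.0 + 0.75 = -0.75
    print(neuron(x, w, step))      # -0.75 < 0, so 0.0
```

Swapping `f` changes only the final non-linearity; the weighted sum is unchanged.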

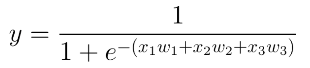

If the activation function is the sigmoid:

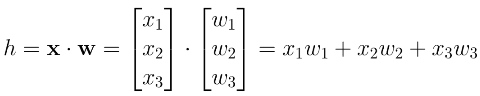
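As a worked example, take the inputs $x = (0.5, 0.3, 0.2)$ and weights $w = (0.4, 0.7, 0.2)$, the same values used in the single-neuron implementation code:

$$h = 0.5 \cdot 0.4 + 0.3 \cdot 0.7 + 0.2 \cdot 0.2 = 0.20 + 0.21 + 0.04 = 0.45$$

$$y = \sigma(h) = \frac{1}{1 + e^{-0.45}} \approx \frac{1}{1.6376} \approx 0.6106$$

Here $\sigma(h) = 1/(1 + e^{-h})$ is the sigmoid, matching the `sigmoid` function in the code.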

In vector form: $h = \mathbf{x} \cdot \mathbf{w}$, so $y = f(\mathbf{x} \cdot \mathbf{w})$.
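In vector form the weighted sum is a dot product, so the explicit loop over $x_i w_i$ can be replaced by a single NumPy call. A minimal sketch, assuming NumPy is installed; `activate_vectorized` is a hypothetical name, not part of the course code.

```python
import numpy as np


def sigmoid(h):
    # Element-wise sigmoid; works on scalars and arrays
    return 1.0 / (1.0 + np.exp(-h))


def activate_vectorized(inputs, weights):
    x = np.asarray(inputs, dtype=float)
    w = np.asarray(weights, dtype=float)
    # h = x . w replaces sum(x_i * w_i)
    h = np.dot(x, w)
    return sigmoid(h)


if __name__ == "__main__":
    print(activate_vectorized([0.5, 0.3, 0.2], [0.4, 0.7, 0.2]))  # approx. 0.6106
```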

Implementation code

```python
import math


def sigmoid(x):
    """Sigmoid activation function

    Args:
        x (float): Value to be processed

    Returns:
        y (float): Output
    """
    y = 1.0 / (1 + math.exp(-x))
    return y


def activate(inputs, weights):
    """Computes activation of neuron based on input signals and connection
    weights. Output = f(x_1*w_1 + x_2*w_2 + ... + x_k*w_k), where 'f' is the
    sigmoid function.

    Args:
        inputs (list): Input signals
        weights (list): Connection weights

    Returns:
        output (float): Output value
    """
    h = 0

    # compute the sum of the product of the input signals and the weights
    # here we're using Python's "zip" function to iterate two lists together
    for x, w in zip(inputs, weights):
        h += x * w

    # process sum through sigmoid activation function
    return sigmoid(h)


if __name__ == "__main__":
    inputs = [0.5, 0.3, 0.2]
    weights = [0.4, 0.7, 0.2]
    output = activate(inputs, weights)
    print(output)
```
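One caveat of the `sigmoid` above: `math.exp(-x)` raises `OverflowError` for large negative `x` (for example, `x = -1000`). A common workaround, sketched here as an assumption rather than the course's approach, is to branch on the sign so that `exp` is only ever called with a non-positive argument. `stable_sigmoid` is a hypothetical name.

```python
import math


def stable_sigmoid(x: float) -> float:
    if x >= 0:
        # exp(-x) <= 1 here, so it cannot overflow
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0 use the equivalent form e^x / (1 + e^x); exp(x) < 1 here
    z = math.exp(x)
    return z / (1.0 + z)


if __name__ == "__main__":
    print(stable_sigmoid(-1000.0))  # 0.0 instead of OverflowError
    print(stable_sigmoid(0.0))      # 0.5
```

Both branches compute the same function, since $e^x / (1 + e^x) = 1 / (1 + e^{-x})$; only the intermediate values differ.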

MLP implementation code

```python
import numpy as np


class MLP:
    """A Multilayer Perceptron class."""

    def __init__(self, num_inputs=3, hidden_layers=(3, 3), num_outputs=2):
        """Constructor for the MLP. Takes the number of inputs,
        a variable number of hidden layers, and number of outputs

        Args:
            num_inputs (int): Number of inputs
            hidden_layers (list or tuple): Sizes (ints) of the hidden layers
            num_outputs (int): Number of outputs
        """
        self.num_inputs = num_inputs
        # store a list copy (avoids a shared mutable default argument)
        self.hidden_layers = list(hidden_layers)
        self.num_outputs = num_outputs

        # create a generic representation of the layers
        layers = [num_inputs] + self.hidden_layers + [num_outputs]

        # create random connection weights for the layers
        weights = []
        for i in range(len(layers) - 1):
            w = np.random.rand(layers[i], layers[i + 1])
            weights.append(w)
        self.weights = weights

    def forward_propagate(self, inputs):
        """Computes forward propagation of the network based on input signals.

        Args:
            inputs (ndarray): Input signals

        Returns:
            activations (ndarray): Output values
        """
        # the input layer activation is just the input itself
        activations = inputs

        # iterate through the network layers
        for w in self.weights:
            # calculate matrix multiplication between previous activation and weight matrix
            net_inputs = np.dot(activations, w)

            # apply sigmoid activation function
            activations = self._sigmoid(net_inputs)

        # return output layer activation
        return activations

    def _sigmoid(self, x):
        """Sigmoid activation function (applied element-wise)

        Args:
            x (ndarray): Values to be processed

        Returns:
            y (ndarray): Output
        """
        y = 1.0 / (1 + np.exp(-x))
        return y


if __name__ == "__main__":
    # create a Multilayer Perceptron
    mlp = MLP()

    # set random values for network's input
    inputs = np.random.rand(mlp.num_inputs)

    # perform forward propagation
    output = mlp.forward_propagate(inputs)

    print("Network activation: {}".format(output))
```
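Each weight matrix in the MLP has shape (neurons in layer i, neurons in layer i+1), so `np.dot` maps the activations of one layer to the next. Because `np.dot` of a 2-D array with a matrix multiplies row by row, the same loop also handles a batch of inputs stacked as rows. The standalone sketch below mirrors the class rather than reproducing it; the helper names `make_weights` and `forward` and the fixed seed are assumptions for illustration.

```python
import numpy as np


def make_weights(layers, seed=0):
    # One random matrix per pair of adjacent layers, shape (layers[i], layers[i+1])
    rng = np.random.default_rng(seed)
    return [rng.random((layers[i], layers[i + 1])) for i in range(len(layers) - 1)]


def forward(inputs, weights):
    activations = np.asarray(inputs, dtype=float)
    for w in weights:
        # (..., n_i) dot (n_i, n_{i+1}) -> (..., n_{i+1}), then element-wise sigmoid
        activations = 1.0 / (1.0 + np.exp(-np.dot(activations, w)))
    return activations


if __name__ == "__main__":
    layers = [3, 3, 3, 2]  # same layout as the MLP defaults: 3 inputs, hidden (3, 3), 2 outputs
    weights = make_weights(layers)
    print([w.shape for w in weights])  # [(3, 3), (3, 3), (3, 2)]
    single = forward([0.1, 0.2, 0.3], weights)
    batch = forward(np.ones((4, 3)), weights)
    print(single.shape, batch.shape)   # (2,) (4, 2)
```

A single input vector yields one output per output neuron; a batch of four inputs yields a (4, 2) array, one row per input.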

Reference

- musikalkemist, DeepLearningForAudioWithPython: code and slides for the "Deep Learning (For Audio) With Python" course on TheSoundOfAI YouTube channel. https://github.com/musikalkemist/DeepLearningForAudioWithPython/tree/master
